Google’s new processor is set to transform the future of artificial intelligence.

Google recently announced the launch of its new artificial intelligence models, Gemini, accompanied by the release of the latest version of its flagship tensor processing unit (TPU) for AI training and inference. This move is seen as Google’s attempt to compete with Nvidia’s market-leading graphics processing units (GPUs).

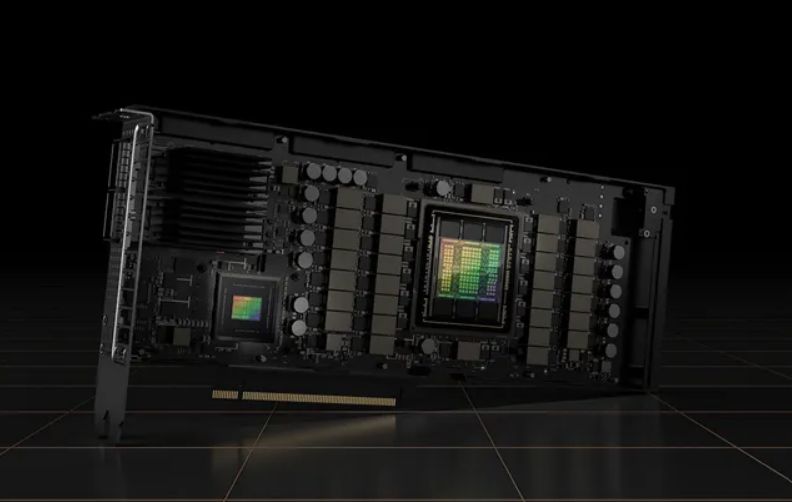

The TPU v5p, Google’s most powerful AI accelerator, has been deployed for the “AI Hypercomputer.” This supercomputing architecture is specifically designed for running AI applications, unlike traditional supercomputers typically used for scientific computations.

The latest version of the TPU includes 8,960 chips per pod (the full interconnected system), compared to 4,096 in the v4 version, and is four times more scalable in terms of total FLOPs available per pod. Each chip provides 4,800 Gbps of interconnect throughput and has 95GB of high-bandwidth memory (HBM), compared to 32GB of HBM in the TPU v4.

A key difference between Nvidia’s H100 and Google’s TPU v5p is availability: Google does not sell its TPUs to other companies; the chips run only in Google’s own data centers, powering its products and services. Google’s TPUs have long been used to support services like Gmail, YouTube, and Android, and the latest version has also been used to train Gemini.

Google’s TPU v5p trains large language models 2.8 times faster than the TPU v4 and offers 2.1 times more value for money. The intermediate version, the TPU v5e, released earlier this year, offers the greatest value for money but is 1.9 times faster than the TPU v4, which leaves the TPU v5p as the most powerful option.

The TPU v5p is even powerful enough to compete with Nvidia’s highly sought-after H100 GPU, one of the best graphics cards for AI work. The H100 processes workloads four times faster than Nvidia’s A100 GPU, according to Nvidia’s own data.

Meanwhile, Google’s TPU v4, according to a study published in April, is 1.2-1.7 times faster than the A100. Preliminary calculations show that the TPU v5p is approximately 3.4-4.8 times faster than the A100, putting it on par with or even surpassing the H100, although more detailed testing is necessary for definitive conclusions.
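The estimate above is a simple chain of ratios, and a short sketch makes the arithmetic explicit. It uses only the figures quoted in this article, and it reads the fourfold figure as the H100’s speedup over the A100, which is an assumption. The variable and function names are illustrative, and the result is a back-of-the-envelope comparison of vendor and study claims, not a benchmark.

```python
# Figures quoted in the article (claims, not measurements made here).
V4_VS_A100 = (1.2, 1.7)   # TPU v4 speedup over the A100: low and high ends
V5P_VS_V4 = 2.8           # TPU v5p LLM-training speedup over the TPU v4
H100_VS_A100 = 4.0        # assumed: H100 speedup over the A100


def chain_speedup(base_range, factor):
    """Multiply each end of a speedup range by a further speedup factor."""
    low, high = base_range
    return (low * factor, high * factor)


# TPU v5p relative to the A100: (v4 / A100) * (v5p / v4)
v5p_vs_a100 = chain_speedup(V4_VS_A100, V5P_VS_V4)

# TPU v5p relative to the H100: divide by the H100's speedup over the A100
v5p_vs_h100 = tuple(x / H100_VS_A100 for x in v5p_vs_a100)

print(f"TPU v5p vs A100: {v5p_vs_a100[0]:.1f}x to {v5p_vs_a100[1]:.1f}x")
print(f"TPU v5p vs H100: {v5p_vs_h100[0]:.2f}x to {v5p_vs_h100[1]:.2f}x")
```

Multiplying speedups like this assumes the three figures were measured on comparable workloads, which the sources do not guarantee. That is one reason the resulting range straddles 1.0 against the H100 and why more detailed testing is needed.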

The TPU v5p marks a major leap in AI hardware and underlines the growing importance of specialized chips for AI and machine learning tasks. It fits a broader industry trend towards hardware optimized for specific computational tasks, particularly as AI models become more complex and demanding. Google’s focus on AI-specific supercomputing with the AI Hypercomputer highlights the need for infrastructure that can handle the massive computational requirements of modern AI systems. This trend is likely to continue as AI is integrated into more industries and demands more powerful and efficient processing. The competition between Google and Nvidia also points to a healthy and dynamic market, one that drives innovation and could lead to more advanced and cost-effective AI technologies.